In this video from September 2016, Mike Rugnetta responds to two controversies about Facebook from earlier that year:

- May 2016: reports of Facebook suppressing conservative views

- August 2016: editorial/news staff replaced with an algorithm

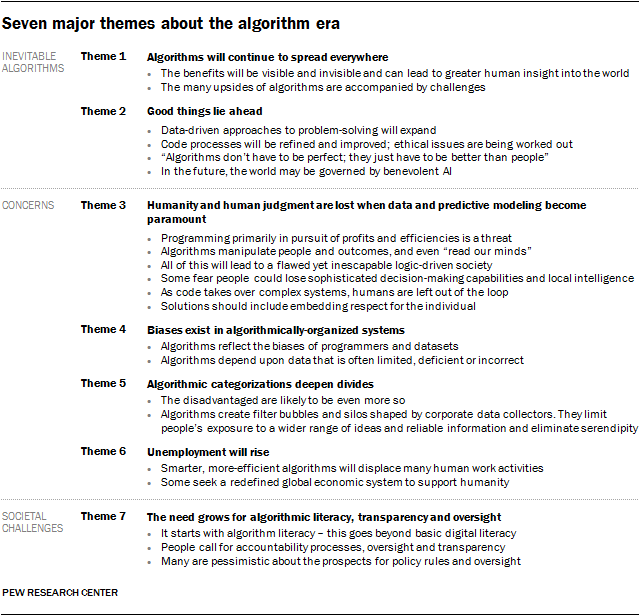

He asks, primarily, why we expect Facebook to be unbiased when any news source is subject to editorial partiality. He connects Facebook's attempt to distance itself from its editorial role by relying on algorithms to 'mathwashing' (Fred Benenson): the use of mathematical terms such as 'algorithm' to imply objectivity and impartiality, along with the assumption that computers have no bias, despite being programmed by humans with biases and relying on data... with biases.

Facebook's sacking of its human team and its move to rely on algorithms demonstrates one of Gillespie's assertions, except that in Facebook's case the company sought a reputation for neutrality by borrowing the reputation of algorithms in general:

> The careful articulation of an algorithm as impartial (even when that characterization is more obfuscation than explanation) certifies it as a reliable sociotechnical actor, lends its results relevance and credibility, and maintains the provider’s apparent neutrality in the face of the millions of evaluations it makes.

(Gillespie 2012, p. 13 of the linked-to PDF)

In the video, Rugnetta suggests we need to abandon the myth of algorithmic neutrality. True, but we also need greater transparency. With so much information available, we need some kind of sorting mechanism; yet if we are to stay in control of our civic participation, we also need to know (and be able to tweak) the criteria it uses.